Overview & Development process

| This section has been updated to Bitcoin Core @ v23.0 |

| Whilst this disclaimer is present this documentation is considered a work in progress and may be subject to minor or major changes at any time without notice. |

| This documentation can temporarily be found hosted at bitcoincore.wtf, however the hosted location is likely to change in the near future. |

Contributor journeys

Some Contributors have documented their journeys into the space which lets us learn about approaches they found useful, and also any pitfalls and things they found difficult along the way.

Decentralized development

Olivia Lovenmark and Amiti Uttarwar describe in their blog post "Developing Bitcoin", how changes to bitcoin follow the pathway from proposal to being merged into the software, and finally into voluntary adoption by users choosing to use the software.

Developer guidelines

The Bitcoin Core project itself contains three documents of particular interest to Contributors:

-

CONTRIBUTING.md — How to get started contributing to the project. (Forking, creating branches, commit patches)

-

developer-notes.md — Development guidelines, coding style etc.

-

productivity.md — Many tips for improving developer productivity (ccache, reviewing code, refspecs, git diffs)

-

test/README.md — Guidance on running the test suite

Using ccache as described in productivity.md above will speed up builds of Bitcoin Core dramatically.

|

| Setting up a ramdisk for the test suite as described in test/README.md will speed up running the test suite dramatically. |

Development workflow

Bitcoin Core uses a GitHub-based workflow for development. The primary function of GitHub in the workflow is to discuss patches and connect them with review comments.

While some other prominent projects, e.g. the Linux kernel, use email to solicit feedback and review, Bitcoin Core has used GitHub for many years. Initially, Satoshi distributed the code through private emails and hosting source archives at bitcoin.org, and later by hosting on SourceForge (which used SVN but did not at that time have a pull request system like GitHub). The earliest reviewers submitted changes using patches either through email exchange with Satoshi, or by posting them on the bitcoin forum.

In August 2009, the source code was moved to GitHub by Sirius, and development has remained there and used the GitHub workflows ever since.

Use of GitHub

The GitHub side of the Bitcoin Core workflow for Contributors consists primarily of:

-

Issues

-

PRs

-

Reviews

-

Comments

Generally, issues are used for two purposes:

-

Posting known issues with the software, e.g., bug reports, crash logs

-

Soliciting feedback on potential changes without providing associated code, as would be required in a PR.

GitHub provides their own guide on mastering Issues which is worth reading to understand the feature-set available when working with an issue.

PRs are where Contributors can submit their code against the main codebase and solicit feedback on the concept, the approach taken for the implementation, and the actual implementation itself.

PRs and Issues are often linked to/from one another:

One common workflow is when an Issue is opened to report a bug. After replicating the issue, a Contributor creates a patch and then opens a PR with their proposed changes.

In this case, the Contributor should, in addition to comments about the patch, reference that the patch fixes the issue. For a patch which fixes issue 22889 this would be done by writing "fixes #22889" in the PR description or in a commit message. In this case, the syntax "fixes #issue-number" is caught by GitHub’s pull request linker, which handles the cross-link automatically.

Another use-case of Issues is soliciting feedback on ideas that might require significant changes. This helps free the project from having too many PRs open which aren’t ready for review and might waste reviewers' time. In addition, this workflow can also save Contributors their own valuable time, as an idea might be identified as unlikely to be accepted before the contributor spends their time writing the code for it.

Most code changes to bitcoin are proposed directly as PRs — there’s no need to open an Issue for every idea before implementing it unless it may require significant changes. Additionally, other Contributors (and would-be Reviewers) will often agree with the approach of a change, but want to "see the implementation" before they can really pass judgement on it.

GitHub is therefore used to help store and track reviews to PRs in a public way.

Comments (inside Issues, PRs, Projects etc.) are where all (GitHub) users can discuss relevant aspects of the item and have history of those discussions preserved for future reference. Often Contributors having "informal" discussions about changes on e.g. IRC will be advised that they should echo the gist of their conversation as a comment on GitHub, so that the rationale behind changes can be more easily determined in the future.

Reviewing code

Jon Atack provides a guide to reviewing a Bitcoin Core PR in his article How To Review Pull Requests in Bitcoin Core.

Gloria Zhao’s review checklist details what a "good" review might look like, along with some examples of what she personally considers good reviews. In addition to this, it details how potential Reviewers can approach a new PR they have chosen to review, along with the sorts of questions they should be asking (and answering) in order to provide a meaningful review. Some examples of the subject areas Gloria covers include the PR’s subject area, motivation, downsides, approach, security and privacy risks, implementation of the idea, performance impact, concurrency footguns, tests and documentation needed.

Contributing code

This section details some of the processes surrounding code contributions to the Bitcoin Core project along with some common pitfalls and tips to try and avoid them.

Branches

You should not use the built-in GitHub branch creation process, as this interferes with and confuses the Bitcoin Core git process.

Commit messages

When writing commit messages be sure to have read Chris Beams' "How to Write a Git Commit Message" blog post. As described in CONTRIBUTING.md, PRs should be prefixed with the component or area the PR affects. Common areas are listed in CONTRIBUTING.md section: Creating the pull request. Individual commit messages are also often given similar prefixes in the commit title depending on which area of the codebase the changes primarily affect.

Creating a PR

Jon Atack’s article How To Contribute Pull Requests To Bitcoin Core describes some less-obvious requirements that any PR you make might be subjected to during peer review, for example that it needs an accompanying test, or that an intermediate commit on the branch doesn’t compile. It also describes the uncodified expectation that Contributors should not only be writing code, but perhaps more importantly be providing reviews on other Contributors' PRs. Most developers enjoy writing their own code more than reviewing code from others, but the decentralized review process is arguably the most critical defence Bitcoin development has against malicious actors and therefore important to try and uphold.

| Jon’s estimates of "5-15 PR reviews|issues solved" per PR submitted is not a hard requirement, just what Jon himself feels would be best for the project. Don’t be put off submitting a potentially valuable PR just because "you haven’t done enough reviews"! |

Maintaining a PR

Once you have a PR open you should expect to receive feedback on it which may require you to make changes and update the branch. For guidance on how to maintain an open PR using git, such as how to redo commit history, as well as edit specific commits, check out this guide from Satsie.

Once you’ve made the changes locally you can force-push them to your remote and see them reflected in the open PR.

|

Unless there is a merge conflict (usually detected by DrahtBot), don’t rebase your changes on master branch before pushing. If you avoid rebases on upstream, Github will show a very useful "Compare" button which reviewers can often use to quickly re-ACK the new changes if they are sufficiently small. If you do rebase, this button becomes useless, as all the rebased changes from master get included, and so a full re-review may be needed. And developer review time is currently our major bottleneck in the project! |

Continuous integration

When PRs are submitted against the primary Bitcoin Core repo a series of CI tests will automatically be run.

These include a series of linters and formatters such as clang-format, flake8 and shellcheck.

It’s possible (and advised) to run these checks locally against any changes you make before you push them.

In order to run the lints yourself you’ll have to first make sure your python environment and system have the packages listed in the CI install script. You can then run a decent sub-set of the checks by running:

python test/lint/lint-circular-dependencies.py

# requires requires 'flake8', 'mypy', 'pyzmq', 'codespell', 'vulture'

python test/lint/lint-python.py

python test/lint/lint-whitespace.pyOr you can run all checks with:

python test/lint/all-lint.py

Previously these checks were shell scripts (*.sh), but they have now been migrated to python on master.

|

+

If you are following with tag v23.0 these may still exist as *.sh.

Linting your changes reduces the chances of pushing them as a PR and then having them quickly being marked as failing CI. The GitHub PR page auto-updates the CI status.

| If you do fail a lint or any other CI check, force-pushing the fix to your branch will cancel the currently-running CI checks and restart them. |

Build issues

Some compile-time issues can be caused by an unclean build directory. The comments in issue 19330 provide some clarifications and tips on how other Contributors clean their directories, as well as some ideas for shell aliases to boost productivity.

Debugging Bitcoin Core

Fabian Jahr has created a guide on "Debugging Bitcoin Core", aimed at detailing the ways in which various Bitcoin Core components can be debugged, including the Bitcoin Core binary itself, unit tests, functional tests along with an introduction to core dumps and the Valgrind memory leak detection suite.

Of particular note to Developers are the configure flags used to build Bitcoin Core without optimisations to permit more effective debugging of the various resulting binary files.

Fabian has also presented on this topic a number of times. A transcript of his edgedevplusplus talk is available.

Codebase archaeology

When considering changing code it can be helpful to try and first understand the rationale behind why it was implemented that way originally. One of the best ways to do this is by using a combination of git tools:

-

git blame -

git log -S -

git log -G -

git log -p -

git log -L

As well as the discussions in various places on the GitHub repo.

git blame

The git blame command will show you when (and by who) a particular line of code was last changed.

For example, if we checkout Bitcoin Core at v22.0 and we are planning to make a change related to the m_addr_send_times_mutex found in src/net_processing.cpp, we might want to find out more about its history before touching it.

With git `blame we can find out the last person who touched this code:

# Find the line number for blame

$ grep -n m_addr_send_times_mutex src/net_processing.cpp

233: mutable Mutex m_addr_send_times_mutex;

235: std::chrono::microseconds m_next_addr_send GUARDED_BY(m_addr_send_times_mutex){0};

237: std::chrono::microseconds m_next_local_addr_send GUARDED_BY(m_addr_send_times_mutex){0};

4304: LOCK(peer.m_addr_send_times_mutex);$ git blame -L233,233 src/net_processing.cpp

76568a3351 (John Newbery 2020-07-10 16:29:57 +0100 233) mutable Mutex m_addr_send_times_mutex;With this information we can easily look up that commit to gain some additional context:

$ git show 76568a3351

───────────────────────────────────────

commit 76568a3351418c878d30ba0373cf76988f93f90e

Author: John Newbery <john@johnnewbery.com>

Date: Fri Jul 10 16:29:57 2020 +0100

[net processing] Move addr relay data and logic into net processingSo we’ve learned now that this mutex was moved here by John from net.{cpp|h} in it’s most recent touch. Let’s see what else we can find out about it.

git log -S

git log -S allows us to search for commits where this line was modified (not where it was only moved, for that use git log -G).

|

A 'modification' (vs. a 'move') in git parlance is the result of uneven instances of the search term in the commit diffs' add/remove sections. This implies that this term has either been added or removed in the commit. |

$ git log -S m_addr_send_times_mutex

───────────────────────────────────────

commit 76568a3351418c878d30ba0373cf76988f93f90e

Author: John Newbery <john@johnnewbery.com>

Date: Fri Jul 10 16:29:57 2020 +0100

[net processing] Move addr relay data and logic into net processing

───────────────────────────────────────

commit ad719297f2ecdd2394eff668b3be7070bc9cb3e2

Author: John Newbery <john@johnnewbery.com>

Date: Thu Jul 9 10:51:20 2020 +0100

[net processing] Extract `addr` send functionality into MaybeSendAddr()

Reviewer hint: review with

`git diff --color-moved=dimmed-zebra --ignore-all-space`

───────────────────────────────────────

commit 4ad4abcf07efefafd439b28679dff8d6bbf62943

Author: John Newbery <john@johnnewbery.com>

Date: Mon Mar 29 11:36:19 2021 +0100

[net] Change addr send times fields to be guarded by new mutexWe learn now that John also originally added this to net.{cpp|h}, before later moving it into net_processing.{cpp|h} as part of a push to separate out addr relay data and logic from net.cpp.

git log -p

git log -p (usually also given with a file name argument) follows each commit message with a patch (diff) of the changes made by that commit to that file (or files).

This is similar to git blame except that git blame shows the source of only lines currently in the file.

git log -L

The -L parameter provided to git log will allow you to trace certain lines of a file through a range given by <start,<end>.

However, newer versions of git will also allow you to provide git log -L with a function name and a file, using:

git log -L :<funcname>:<file>This will then display commits which modified this function in your pager.

git log --follow file…

One of the most famous file renames was src/main.{h,cpp} to src/validation.{h,cpp} in 2016.

If you simply run git log src/validation.h, the oldest displayed commit is one that implemented the rename.

git log --follow src/validation.h will show the same recent commits followed by the older src/main.h commits.

To see the history of a file that’s been removed, specify " — " before the file name, such as:

git log -- some_removed_file.cppPR discussion

To get even more context on the change we can leverage GitHub and take a look at the comments on the PR where this mutex was introduced (or at any subsequent commit where it was modified).

To find the PR you can either paste the commit hash (4ad4abcf07efefafd439b28679dff8d6bbf62943) into GitHub, or list merge commits in reverse order, showing oldest merge with the commit at the top to show the specific PR number e.g.:

$ git log --merges --reverse --oneline --ancestry-path 4ad4abcf07efefafd439b28679dff8d6bbf62943..upstream | head -n 1

d3fa42c79 Merge bitcoin/bitcoin#21186: net/net processing: Move addr data into net_processingReading up on PR#21186 will hopefully provide us with more context we can use.

We can see from the linked issue 19398 what the motivation for this move was.

Building from source

When building Bitcoin Core from source, there are some platform-dependant instructions to follow.

To learn how to build for your platform, visit the Bitcoin Core bitcoin/doc directory, and read the file named "build-*.md", where "*" is the name of your platform. For windows this is "build-windows.md", for macOS this is "build-osx.md" and for most linux distributions this is "build-unix.md".

There is also a guide by Jon Atack on how to compile and test Bitcoin Core.

Finally, Blockchain Commons also offer a guide to building from source.

Cleaner builds

It can be helpful to use a separate build directory e.g. build/ when compiling from source.

This can help avoid spurious Linker errors without requiring you to run make clean often.

From within your Bitcoin Core source directory you can run:

# Clean current source dir in case it was already configured

make distclean

# Make new build dir

mkdir build && cd build

# Run normal build sequence with amended path

../autogen.sh

../configure --your-normal-options-here

make -j `nproc`

make check|

To run individual functional tests using the bitcoind binary built in an out-of-source build change directory back to the root source and specify the config.ini file from within the build directory: |

Codebase documentation

Bitcoin Core uses Doxygen to generate developer documentation automatically from its annotated C++ codebase.

Developers can access documentation of the current release of Bitcoin Core online at doxygen.bitcoincore.org, or alternatively can generate documentation for their current git HEAD using make docs (see Generating Documentation for more info).

Testing

Three types of test network are available:

-

Testnet

-

Regtest

-

Signet

These three networks all use coins of zero value, so can be used experimentally.

They primary differences between the networks are as follows:

| Feature | Testnet | Regtest | Signet |

|---|---|---|---|

Mining algorithm |

Public hashing with difficulty |

Local hashing, low difficulty |

Signature from authorized signers |

Block production schedule |

Varies per hashrate |

On-demand |

Reliable intervals (default 2.5 mins) |

P2P port |

18333 |

18444 |

38333 |

RPC port |

18332 |

18443 |

38332 |

Peers |

Public |

None |

Public |

Topology |

Organic |

Manual |

Organic |

Chain birthday |

2011-02-02 |

At time of use |

2020-09-01 |

Can initiate re-orgs |

If Miner |

Yes |

No |

Primary use |

Networked testing |

Automated integration tests |

Networked testing |

Signet

Signet is both a tool that allows Developers to create their own networks for testing interactions between different Bitcoin software, and the name of the most popular of these public testing networks. Signet was codified in BIP 325.

To connect to the "main" Signet network, simply start bitcoind with the signet option, e.g. bitcoind -signet.

Don’t forget to also pass the signet option to bitcoin-cli if using it to control bitcoind, e.g. bitcoin-cli -signet your_command_here.

Instructions on how to setup your own Signet network can be found in the Bitcoin Core Signet README.md.

The Bitcoin wiki Signet page provides additional background on Signet.

Regtest

Another test network named regtest, which stands for regression test, is also available.

This network is enabled by starting bitcoind with the -regtest option.

This is an entirely self-contained mode, giving you complete control of the state of the blockchain.

Blocks can simply be mined on command by the network operator.

The functional tests use this mode, but you can also run it manually.

It provides a good means to learn and experiment on your own terms.

It’s often run with a single node but may be run with multiple co-located (local) nodes (most of the functional tests do this).

The blockchain initially contains only the genesis block, so you need to mine >100 blocks in order to have any spendable coins from a mature coinbase.

Here’s an example session (after you’ve built bitcoind and bitcoin-cli):

$ mkdir -p /tmp/regtest-datadir

$ src/bitcoind -regtest -datadir=/tmp/regtest-datadir

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir getblockchaininfo

{

"chain": "regtest",

"blocks": 0,

"headers": 0,

"bestblockhash": "0f9188f13cb7b2c71f2a335e3a4fc328bf5beb436012afca590b1a11466e2206",

_(...)_

}

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir createwallet testwallet

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir -generate 3

{

"address": "bcrt1qpw3pjhtf9myl0qk9cxt54qt8qxu2mj955c7esx",

"blocks": [

"6b121b0c094b5e107509632e8acade3f6c8c2f837dc13c72153e7fa555a29984",

"5da0c549c3fddf2959d38da20789f31fa7642c3959a559086436031ee7d7ba54",

"3210f3a12c25ea3d8ab38c0c4c4e0d5664308b62af1a771dfe591324452c7aa9"

]

}

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir getblockchaininfo

{

"chain": "regtest",

"blocks": 3,

"headers": 3,

"bestblockhash": "3210f3a12c25ea3d8ab38c0c4c4e0d5664308b62af1a771dfe591324452c7aa9",

_(...)_

}

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir getbalances

{

"mine": {

"trusted": 0.00000000,

"untrusted_pending": 0.00000000,

"immature": 150.00000000

}

}

$ src/bitcoin-cli -regtest -datadir=/tmp/regtest-datadir stopYou may stop and restart the node and it will use the existing state. (Simply remove the data directory to start again from scratch.)

Blockchain Commons offer a guide to Using Bitcoin Regtest.

Testnet

Testnet is a public bitcoin network where mining is performed in the usual way (hashing) by decentralized miners.

However, due to much lower hashrate (than mainnet), testnet is susceptible extreme levels of inter-block volatility due to the way the difficulty adjustment (DA) works: if a mainnet-scale miner wants to "test" their mining setup on testnet then they may cause the difficulty to increase greatly. Once the miner has concluded their tests they may remove all hashpower from the network at once. This can leave the network with a high difficulty which the DA will take a long time to compensate for.

Therefore a "20 minute" rule was introduced such that the difficulty would reduce to the minimum for one block before returning to its previous value. This ensures that there are no intra-block times greater than 20 minutes.

However there is a bug in the implementation which means that if this adjustment occurs on a difficulty adjustment block the difficulty is lowered to the minimum for one block but then not reset, making it permanent rather than a one-off adjustment. This will result in a large number of blocks being found until the DA catches up to the level of hashpower on the network.

It’s usually preferable to test private changes on a local regtest, or public changes on a Signet for this reason.

Manual testing while running a functional test

Running regtest as described allows you to start from scratch with an empty chain, empty wallet, and no existing state.

An effective way to use regtest is to start a functional test and insert a python debug breakpoint.

You can set a breakpoint in a test by adding import pdb; pdb.set_trace() at the desired stopping point; when the script reaches this point you’ll see the debugger’s (Pdb) prompt, at which you can type help and see and do all kinds of useful things.

While the (Python) test is paused, you can still control the node using bitcoin-cli.

First you need to look up the data directory for the node(s), as below:

$ ps alx | grep bitcoind

0 1000 57478 57476 20 0 1031376 58604 pipe_r SLl+ pts/10 0:06 /g/bitcoin/src/bitcoind -datadir=/tmp/bitcoin_func_test_ovsi15f9/node0 -logtimemicros -debug (...)

0 1000 57479 57476 20 0 965964 58448 pipe_r SLl+ pts/10 0:06 /g/bitcoin/src/bitcoind -datadir=/tmp/bitcoin_func_test_ovsi15f9/node1 -logtimemicros -debug (...)With the -datadir path you can look at the bitcoin.conf files within the data directories to see what config options are being specified for the test (there’s always regtest=1) in addition to the runtime options, which is a good way to learn about some advanced uses of regtest.

In addition to this, we can use the -datadir= option with bitcoin-cli to control specific nodes, e.g.:

$ src/bitcoin-cli -datadir=/tmp/bitcoin_func_test_ovsi15f9/node0 getblockchaininfoGetting started with development

One of the roles most in-demand from the project is that of code review, and in fact this is also one of the best ways of getting familiarized with the codebase too! Reviewing a few PRs and adding your review comments to the PR on GitHub can be really valuable for you, the PR author and the bitcoin community. This Google Code Health blog post gives some good advice on how to go about code review and getting past "feeling that you’re not as smart as the programmer who wrote the change". If you’re going to ask some questions as part of review, try and keep questions respectful.

There is also a Bitcoin Core PR Review Club held weekly on IRC which provides an ideal entry point into the Bitcoin Core codebase. A PR is selected, questions on the PR are provided beforehand to be discussed on irc.libera.chat #bitcoin-core-pr-reviews IRC room and a host will lead discussion around the changes.

Aside from review, there are 3 main avenues which might lead you to submitting your own PR to the repository:

-

Finding a

good first issue, as tagged in the issue tracker -

Fixing a bug

-

Adding a new feature (that you want for yourself?)

Choosing a "good first issue" from an area of the codebase that seems interesting to you is often a good approach. This is because these issues have been somewhat implicitly "concept ACKed" by other Contributors as "something that is likely worth someone working on". Don’t confuse this for meaning that if you work on it that it is certain to be merged though.

If you don’t have a bug fix or new feature in mind and you’re struggling to find a good first issue which looks suitable for you, don’t panic. Instead keep reviewing other Contributors' PRs to continue improving your understanding of the process (and the codebase) while you watch the Issue tracker for something which you like the look of.

When you’ve decided what to work on it’s time to take a look at the current behaviour of that part of the code and perhaps more importantly, try to understand why this was originally implemented in this way. This process of codebase "archaeology" will prove invaluable in the future when you are trying to learn about other parts of the codebase on your own.

#bitcoin-core-dev IRC channel

The Bitcoin Core project has an IRC channel #bitcoin-core-dev available on the Libera.chat network.

If you are unfamiliar with IRC there is a short guide on how to use it with a client called Matrix here.

IRC clients for all platforms and many terminals are available.

"Lurking" (watching but not talking) in the IRC channel can both be a great way to learn about new developments as well as observe how new technical changes and issues are described and thought about from other developers with an adversarial mindset. Once you are comfortable with the rules of the room and have questions about development then you can join in too!

|

This channel is reserved for discussion about development of the Bitcoin Core software only, so please don’t ask general Bitcoin questions or talk about the price or other things which would be off topic in there. There are plenty of other channels on IRC where those topics can be discussed. |

There are also regular meetings held on #bitcoin-core-dev which are free and open for anyone to attend. Details and timings of the various meetings are found here.

Communication

In reality there are no hard rules on choosing a discussion forum, but in practice there are some common conventions which are generally followed:

-

If you want to discuss the codebase of the Bitcoin Core implementation, then discussion on either the GitHub repo or IRC channel is usually most-appropriate.

-

If you want to discuss changes to the core bitcoin protocol, then discussion on the mailing list is usually warranted to solicit feedback from (all) bitcoin developers, including the many of them that do not work on Bitcoin Core directly.

-

If mailing list discussions seem to indicate interest for a proposal, then creation of a BIP usually follows.

-

If discussing something Bitcoin Core-related, there can also be a question of whether it’s best to open an Issue, to first discuss the problem and brainstorm possible solution approaches, or whether you should implement the changes as you see best first, open a PR, and then discuss changes in the PR. Again, there are no hard rules here, but general advice would be that for potentially-controversial subjects, it might be worth opening an Issue first, before (potentially) wasting time implementing a PR fix which is unlikely to be accepted.

Regarding communication timelines it is important to remember that many contributors are unpaid volunteers, and even if they are sponsored or paid directly, nobody owes you their time. That being said, often during back-and-forth communication you might want to nudge somebody for a response and it’s important that you do this in a courteous way. There are again no hard rules here, but it’s often good practice to give somebody on the order of a few days to a week to respond. Remember that people have personal lives and often jobs outside of Bitcoin development.

ACK / NACK

If you are communicating on an Issue or PR, you might be met with "ACK"s and "NACK"s (or even "approach (N)ACK" or similar). ACK, or "acknowledge" generally means that the commenter approves with what is being discussed previously. NACK means they tend to not approve.

What should you do if your PR is met with NACKs or a mixture of ACKs and NACKs? Again there are no hard rules but generally you should try to consider all feedback as constructive criticism. This can feel hard when veteran contributors appear to drop by and with a single "NACK" shoot down your idea, but in reality it presents a good moment to pause and reflect on why someone is not agreeing with the path forward you have presented.

Although there are again no hard "rules" or "measurement" systems regarding (N)ACKs, maintainers — who’s job it is to measure whether a change has consensus before merging — will often use their discretion to attribute more weight behind the (N)ACKs of contributors that they feel have a good understanding of the codebase in this area.

This makes sense for many reasons, but lets imagine the extreme scenario where members of a Reddit/Twitter thread (or other group) all "brigade" a certain pull request on GitHub, filling it with tens or even hundreds of NACKs… In this scenario it makes sense for a maintainer to somewhat reduce the weighting of these NACKs vs the (N)ACKs of regular contributors:

We are not sure which members of this brigade:

-

Know how to code and with what competency

-

Are familiar with the Bitcoin Core codebase

-

Understand the impact and repercussions of the change

Whereas we can be more sure that regular contributors and those respondents who are providing additional rationale in addition to their (N)ACK, have some understanding of this nature. Therefore it makes sense that we should weight regular contributors' responses, and those with additional compelling rationale, more heavily than GitHub accounts created yesterday which reply with a single word (N)ACK.

From this extreme example we can then use a sliding scale to the other extreme where, if a proven expert in this area is providing a lone (N)ACK to a change, that we should perhaps step back and consider this more carefully.

Does this mean that your views as a new contributor are likely to be ignored? No!! However it might mean that you might like to include rationale in any ACK/NACK comments you leave on PRs, to give more credence to your views.

When others are (N)ACK-ing your work, you should not feel discouraged because they have been around longer than you. If they have not left rationale for the comment, then you should ask them for it. If they have left rationale but you disagree, then you can politely state your reasons for disagreement.

In terms of choosing a tone, the best thing to do it to participate in PR review for a while and observe the tone used in public when discussing changes.

Backports

Bitcoin Core often backports fixes for bugs and soft fork activations into previous software releases.

Generally maintainers will handle backporting for you, unless for some reason the process will be too difficult. If this point is reached a decision will be made on whether the backport is abandoned, or a specific (new) fix is created for the older software version.

Reproducible Guix builds

Bitcoin Core uses the Guix package manager to achieve reproducible builds. Carl Dong gave an introduction to GUIX via a remote talk in 2019, and also discussed it further on a ChainCode podcast episode.

There are official instructions on how to run a Guix build in the Bitcoin Core repo which you should certainly follow for your first build, but once you have managed to set up the Guix environment (along with e.g. MacOS SDK), hebasto provides a more concise workflow for subsequent or repeated builds in his gist.

Software Life-cycle

An overview of the software life-cycle for Bitcoin Core can be found at https://bitcoincore.org/en/lifecycle/

Organisation & roles

The objective of the Bitcoin Core Organisation is to represent an entity that is decentralized as much as practically possible on a centralised platform. One where no single Contributor, Member, or Maintainer has unilateral control over what is/isn’t merged into the project. Having multiple Maintainers, Members, Contributors, and Reviewers gives this objective the best chance of being realised.

Contributors

Anyone who contributes code to the codebase is labelled a Contributor by GitHub and also by the community. As of Version 23.0 of Bitcoin Core, there are > 850 individual Contributors credited with changes.

Members

Some Contributors are also labelled as Members of the Bitcoin organisation. There are no defined criteria for becoming a Member of the organisation; persons are usually nominated for addition or removal by current Maintainer/Member/Admin on an ad-hoc basis. Members are typically frequent Contributors/Reviewers and have good technical knowledge of the codebase.

Some members also have some additional permissions over Contributors, such as adding/removing tags on issues and Pull Requests (PRs); however, being a Member does not permit you to merge PRs into the project. Members can also be assigned sections of the codebase in which they have specific expertise to be more easily requested for review as Suggested Reviewers by PR authors.

Maintainers

Some organisation Members are also project Maintainers. The number of maintainers is arbitrary and is subject to change as people join and leave the project, but has historically been less than 10. PRs can only be merged into the main project by Maintainers. While this might give the illusion that Maintainers are in control of the project, the Maintainers' role dictates that they should not be unilaterally deciding which PRs get merged and which don’t. Instead, they should be determining the mergability of changes based primarily on the reviews and discussions of other Contributors on the GitHub PR.

Working on that basis, the Maintainers' role becomes largely janitorial. They are simply executing the desires of the community review process, a community which is made up of a decentralized and diverse group of Contributors.

Organisation fail-safes

It is possible for a "rogue PR" to be submitted by a Contributor; we rely on systematic and thorough peer review to catch these. There has been discussion on the mailing list about purposefully submitting malicious PRs to test the robustness of this review process.

In the event that a Maintainer goes rogue and starts merging controversial code, or conversely, not merging changes that are desired by the community at large, then there are two possible avenues of recourse:

-

Have the Lead Maintainer remove the malicious Maintainer

-

In the case that the Lead Maintainer themselves is considered to be the rogue agent: fork the project to a new location and continue development there.

BIPs

Bitcoin uses Bitcoin Improvement Proposals (BIPs) as a design document for introducing new features or behaviour into bitcoin. Bitcoin Magazine describes what a BIP is in their article What Is A Bitcoin Improvement Proposal (BIP), specifically highlighting how BIPs are not necessarily binding documents required to achieve consensus.

The BIPs are currently hosted on GitHub in the bitcoin/bips repo.

|

BIP process

The BIPs include BIP 2 which self-describes the BIP process in more detail. Of particular interest might be the sections BIP Types and BIP Workflow. |

What does having a BIP number assigned to an idea mean

Bitcoin Core issue #22665 described how BIP125 was not being strictly adhered to by Bitcoin Core. This raised discussion amongst developers about whether the code (i.e. "the implementation") or the BIP itself should act as the specification, with most developers expressing that they felt that "the code was the spec" and any BIP generated was merely a design document to aid with re-implementation by others, and should be corrected if necessary.

| This view was not completely unanimous in the community. |

For consensus-critical code most Bitcoin Core Developers consider "the code is the spec" to be the ultimate source of truth, which is one of the reasons that recommending running other full node implementations can be so difficult. A knock-on effect of this was that there were calls for review on BIP2 itself, with respect to how BIPs should be updated/amended. Newly-appointed BIP maintainer Karl-Johan Alm (a.k.a. kallewoof) posted his thoughts on this to the bitcoin-dev mailing list.

In summary a BIP represents a design document which should assist others in implementing a specific feature in a compatible way. These features are optional to usage of Bitcoin, and therefore implementation of BIPs are not required to use Bitcoin, only to remain compatible. Simply being assigned a BIP does not mean that an idea is endorsed as being a "good" idea, only that it is fully-specified in a way that others could use to re-implement. Many ideas are assigned a BIP and then never implemented or used on the wider network.

Project stats

===============================================================================

Language Files Lines Code Comments Blanks

===============================================================================

GNU Style Assembly 1 913 742 96 75

Autoconf 23 3530 1096 1727 707

Automake 5 1803 1505 85 213

BASH 10 1772 1100 438 234

Batch 1 1 1 0 0

C 22 37994 35681 1183 1130

C Header 481 72043 43968 17682 10393

CMake 3 901 706 86 109

C++ 687 197249 153482 20132 23635

Dockerfile 2 43 32 5 6

HEX 29 576 576 0 0

Java 1 23 18 0 5

JSON 94 7968 7630 0 338

Makefile 51 2355 1823 198 334

MSBuild 2 88 87 0 1

Objective-C++ 3 186 134 20 32

Prolog 2 22 16 0 6

Python 298 66473 48859 7598 10016

Scheme 1 638 577 29 32

Shell 50 2612 1745 535 332

SVG 20 720 697 15 8

Plain Text 6 1125 0 1113 12

TypeScript 98 228893 228831 0 62

Visual Studio Pro| 16 956 940 0 16

Visual Studio Sol| 1 162 162 0 0

XML 2 53 47 0 6

-------------------------------------------------------------------------------

HTML 2 401 382 0 19

|- CSS 2 98 82 1 15

(Total) 499 464 1 34

-------------------------------------------------------------------------------

Markdown 192 33460 0 26721 6739

|- BASH 16 206 173 12 21

|- C 2 53 47 3 3

|- C++ 3 345 267 54 24

|- INI 1 7 6 0 1

|- Lisp 1 13 13 0 0

|- PowerShell 1 1 1 0 0

|- Python 2 346 280 61 5

|- Shell 3 21 17 1 3

|- XML 1 4 4 0 0

(Total) 34456 808 26852 6796

===============================================================================

Total 2103 662960 530837 77663 54460

===============================================================================Source: tokei

Exercises

Subsequent sections will contain various exercises related to their subject areas which will require controlling Bitcoin Core nodes, compiling Bitcoin Core and making changes to the code.

To prepare for this we will begin with the following exercises which will ensure that our environment is ready:

-

Build Bitcoin Core from source

-

Clone Bitcoin Core repository from GitHub

-

Check out the latest release tag (e.g.

v24.0.1) -

Install any dependencies required for your system

-

Follow the build instructions to compile the programs

-

Run

make checkto run the unit tests -

Follow the documentation to install dependencies required to run the functional tests

-

Run the functional tests

-

-

Run a

bitcoindnode in regtest mode and control it using theclitool./src/bitcoind -regtestwill start bitcoind in regtest mode. You can then control it using./src/bitcoin-cli -regtest -getinfo -

Run and control a Bitcoin Core node using the

TestShellpython class from the test framework in a Jupyter notebook-

See Running nodes via Test Framework for more information on how to do this

-

-

Review a Pull Request from the repo

-

Find a PR (which can be open or closed) on GitHub which looks interesting and/or accessible

-

Checkout the PR locally

-

Review the changes

-

Record any questions that arise during code review

-

Build the PR

-

Test the PR

-

Break a test / add a new test

-

Leave review feedback on GitHub, possibly including:

ACK/NACK

Approach

How you reviewed it

Your system specifications if relevant

Any suggested nits

-

Architecture

| This section has been updated to Bitcoin Core @ v23.0 |

Bitcoin Core v0.1 contained 26 source code and header files (*.h/cpp) with main.cpp containing the majority of the business logic. As of v23.0 there are more than 800 source files (excluding bench/, test/ and all subtrees), more than 200 benchmarking and cpp unit tests, and more than 200 python tests and lints.

General design principles

Over the last decade, as the scope, complexity and test coverage of the codebase has increased, there has been a general effort to not only break Bitcoin Core down from its monolithic structure but also to move towards it being a collection of self-contained subsystems. The rationale for such a goal is that this makes components easier to reason about, easier to test, and less-prone to layer violations, as subsystems can contain a full view of all the information they need to operate.

Subsystems can be notified of events relevant to them and take appropriate actions on their own. On the GUI/QT side this is handled with signals and slots, but in the core daemon this is largely still a producer/consumer pattern.

The various subsystems are often suffixed with Manager or man, e.g. CConnman or ChainstateManager.

The extra "C" in CConnman is a hangover from the Hungarian notation used originally by Satoshi.

This is being phased out as-and-when affected code is touched during other changes.

|

You can see some (but not all) of these subsystems being initialized in init.cpp#AppInitMain().

There is a recent preference to favour python over bash/sh for scripting, e.g. for linters, but many shell scripts remain in place for CI and contrib/ scripts.

bitcoind overview

The following diagram gives a brief overview of how some of the major components in bitcoind are related.

| This diagram is not exhaustive and includes simplifications. |

| dashed lines indicate optional components |

bitcoind overview| Component | Simplified description |

|---|---|

|

Manage peers' network addresses |

|

Manage network connections to peers |

|

Give clients access to chain state, fee rate estimates, notifications and allow tx submission |

|

An interface for interacting with 1 or 2 chainstates (1. IBD-verified, 2. optional snapshot) |

|

Manage net groups. Ensure we don’t connect to multiple nodes in the same ASN bucket |

|

Validate and store (valid) transactions which may be included in the next block |

|

Manage peer state and interaction e.g. processing messages, fetching blocks & removing for misbehaviour |

|

Maintains a tree of blocks on disk (via LevelDB) to determine most-work tip |

|

Manages |

bitcoin-cli overview

The following diagram gives a brief overview of the major components in bitcoin-cli.

| This diagram is not exhaustive and includes simplifications. |

bitcoin-cli overviewWallet structure

The following diagram gives a brief overview of how the wallet is structured.

| This diagram is not exhaustive and includes simplifications. |

| dashed lines indicate optional components |

| Component | Simplified description |

|---|---|

|

Represents a single wallet. Handles reads and writes to disk |

|

Base class for the below SPKM classes to override before being used by |

|

A SPKM for descriptor-based wallets |

|

A SPKM for legacy wallets |

|

An interface for a |

|

Give clients access to chain state, fee rate estimates, notifications and allow tx submission |

|

The primary wallet lock, held for atomic wallet operations |

Tests overview

| Tool | Usage |

|---|---|

unit tests |

|

functional tests |

|

lint checks |

|

fuzz |

See the documentation |

util tests |

|

Bitcoin Core is also introducing (functional) "stress tests" which challenge the program via interruptions and missing files to ensure that we fail gracefully, e.g. the tests introduced in PR#23289.

Test directory structure

The following diagram gives a brief overview of how the tests are structured within the source directory.

| This diagram is not exhaustive and includes simplifications. |

| dashed lines indicate optional components |

The fuzz_targets themselves are located in the test folder, however the fuzz tests are run via the test_runner in src/test so we point fuzz to there.

|

qa_assets are found in a separate repo altogether, as they are quite large (~3.5GB repo size and ~13.4GB on clone).

|

Test coverage

Bitcoin Core’s test coverage reports can be found here.

Threads

The main() function starts the main bitcoind process thread, usefully named bitcoind.

All subsequent threads are currently started as children of the bitcoind thread, although this is not an explicit design requirement.

The Bitcoin Core Developer docs contains a section on threads, which is summarised below in two tables, one for net threads, and one for other threads.

| Name | Function | Description |

|---|---|---|

|

|

Responsible for starting up and shutting down the application, and spawning all sub-threads |

|

|

Loads blocks from |

|

|

Parallel script validation threads for transactions in blocks |

|

|

Libevent thread to listen for RPC and REST connections |

|

|

HTTP worker threads. Threads to service RPC and REST requests |

|

|

Indexer threads. One thread per indexer |

|

|

Does asynchronous background tasks like dumping wallet contents, dumping |

|

|

Libevent thread for tor connections |

Net threads

| Name | Function | Description |

|---|---|---|

|

|

Application level message handling (sending and receiving). Almost all |

|

|

Loads addresses of peers from the |

|

|

Universal plug-and-play startup/shutdown |

|

|

Sends/Receives data from peers on port 8333 |

|

|

Opens network connections to added nodes |

|

|

Initiates new connections to peers |

|

|

Listens for and accepts incoming I2P connections through the I2P SAM proxy |

Thread debugging

In order to debug a multi-threaded application like bitcoind using gdb you will need to enable following child processes.

Below is shown the contents of a file threads.brk which can be sourced into gdb using source threads.brk, before you start debugging bitcoind.

The file also loads break points where new threads are spawned.

set follow-fork-mode child

break node::ThreadImport

break StartScriptCheckWorkerThreads

break ThreadHTTP

break StartHTTPServer

break ThreadSync

break SingleThreadedSchedulerClient

break TorControlThread

break ThreadMessageHandler

break ThreadDNSAddressSeed

break ThreadMapPort

break ThreadSocketHandler

break ThreadOpenAddedConnections

break ThreadOpenConnections

break ThreadI2PAcceptIncomingLibrary structure

Bitcoin Core compilation outputs a number of libraries, some which are designed to be used internally, and some which are designed to be re-used by external applications.

The internally-used libraries generally have unstable APIs making them unsuitable for re-use, but libbitcoin_consensus and libbitcoin_kernel are designed to be re-used by external applications.

Bitcoin Core has a guide which describes the various libraries, their conventions, and their various dependencies. The dependency graph is shown below for convenience, but may not be up-to-date with the Bitcoin Core document.

It follows that API changes to the libraries which are internally-facing can be done slightly easier than for libraries with externally-facing APIs, for which more care for compatibility must be taken.

Source code organization

Issue #15732 describes how the Bitcoin Core project is striving to organize libraries and their associated source code, copied below for convenience:

Here is how I am thinking about the organization:

libbitcoin_server.a,libbitcoin_wallet.a, andlibbitcoinqt.ashould all be terminal dependencies. They should be able to depend on other symbols in other libraries, but no other libraries should depend on symbols in them (and they shouldn’t depend on each other).

libbitcoin_consensus.ashould be a standalone library that doesn’t depend on symbols in other libraries mentioned here

libbitcoin_common.aandlibbitcoin_util.aseem very interchangeable right now and mutually depend on each other. I think we should either merge them into one library, or create a new top-levelsrc/common/directory complementingsrc/util/, and start to distinguish general purpose utility code (like argument parsing) from bitcoin-specific utility code (like formatting bip32 paths and using ChainParams). Both these libraries can be depended on bylibbitcoin_server.a,libbitcoin_wallet.a, andlibbitcoinqt.a, and they can depend onlibbitcoin_consensus.a. If we want to split util and common up, as opposed to merging them together, then util shouldn’t depend on libconsensus, but common should.Over time, I think it’d be nice if source code organization reflected library organization . I think it’d be nice if all

libbitcoin_utilsource files lived insrc/util, alllibbitcoin_consensus.asource files lived insrc/consensus, and alllibbitcoin_server.acode lived insrc/node(and maybe the library was calledlibbitcoin_node.a).

You can track the progress of these changes by following links from the issue to associated PRs.

The libbitcoin-kernel project will provide further clean-ups and improvements in this area.

If you want to explore for yourself which sources certain libraries require on the current codebase, you can open the file src/Makefile.am and search for _SOURCES.

Userspace files

Bitcoin Core stores a number of files in its data directory ($DATADIR) at runtime.

Block and undo files

- $DATADIR/blocks/blk*.dat

-

Stores raw network-format block data in order received.

- $DATADIR/blocks/rev*.dat

-

Stores block "undo" data in order processed.

You can see blocks as 'patches' to the chain state (they consume some unspent outputs, and produce new ones), and see the undo data as reverse patches. They are necessary for rolling back the chainstate, which is necessary in case of reorganisations.

— Pieter Wuille

stackexchange

Indexes

With data from the raw block* and rev* files, various LevelDB indexes can be built. These indexes enable fast lookup of data without having to rescan the entire block chain on disk.

Some of these databases are mandatory and some of them are optional and can be enabled using run-time configuration flags.

- Block Index

-

Filesystem location of blocks + some metadata

- Chainstate Index

-

All current UTXOs + some metadata

- Tx Index

-

Filesystem location of all transactions by txid

- Block Filter Index

-

BIP157 filters, hashes and headers

- Coinstats Index

-

UTXO set statistics

| Name | Location | Optional | Class |

|---|---|---|---|

Block Index |

$DATADIR/blocks/index |

No |

|

Chainstate Index |

$DATADIR/chainstate |

No |

|

Tx Index |

$DATADIR/indexes/txindex |

Yes |

|

Block Filter Index |

$DATADIR/indexes/blockfilter/<filter name> |

Yes |

|

Coinstats Index |

$DATADIR/indexes/coinstats |

Yes |

|

Deep technical dive

lsilva01 has written a deep technical dive into the architecture of Bitcoin Core as part of the Bitcoin Core Onboarding Documentation in Bitcoin Architecture.

Once you’ve gained some insight into the architecture of the program itself you can learn further details about which code files implement which functionality from the Bitcoin Core regions document.

James O’Beirne has recorded 3 videos which go into detail on how the codebase is laid out, how the build system works, what developer tools there are, as well as what the primary function of many of the files in the codebase are:

ryanofsky has written a handy guide covering the different libraries contained within Bitcoin Core, along with some of their conventions and a dependency graph for them. Generally speaking, the desire is for the Bitcoin Core project to become more modular and less monolithic over time.

Subtrees

Several parts of the repository (LevelDB, crc32c, secp256k1 etc.) are subtrees of software maintained elsewhere.

Some of these are maintained by active developers of Bitcoin Core, in which case changes should go directly upstream without being PRed directly against the project. They will be merged back in the next subtree merge.

Others are external projects without a tight relationship with our project.

There is a tool in test/lint/git-subtree-check.sh to check a subtree directory for consistency with its upstream repository.

See the full subtrees documentation for more information.

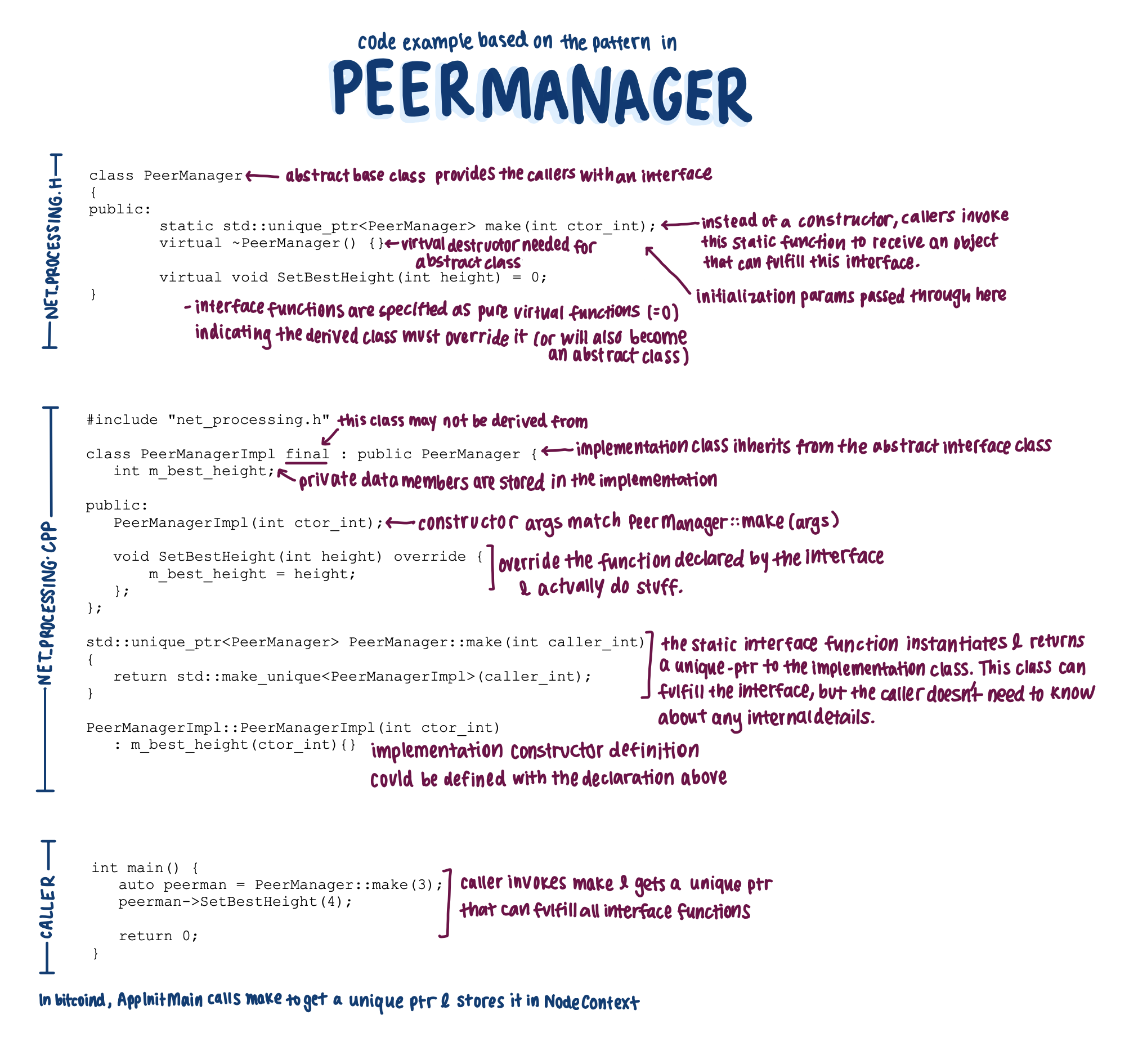

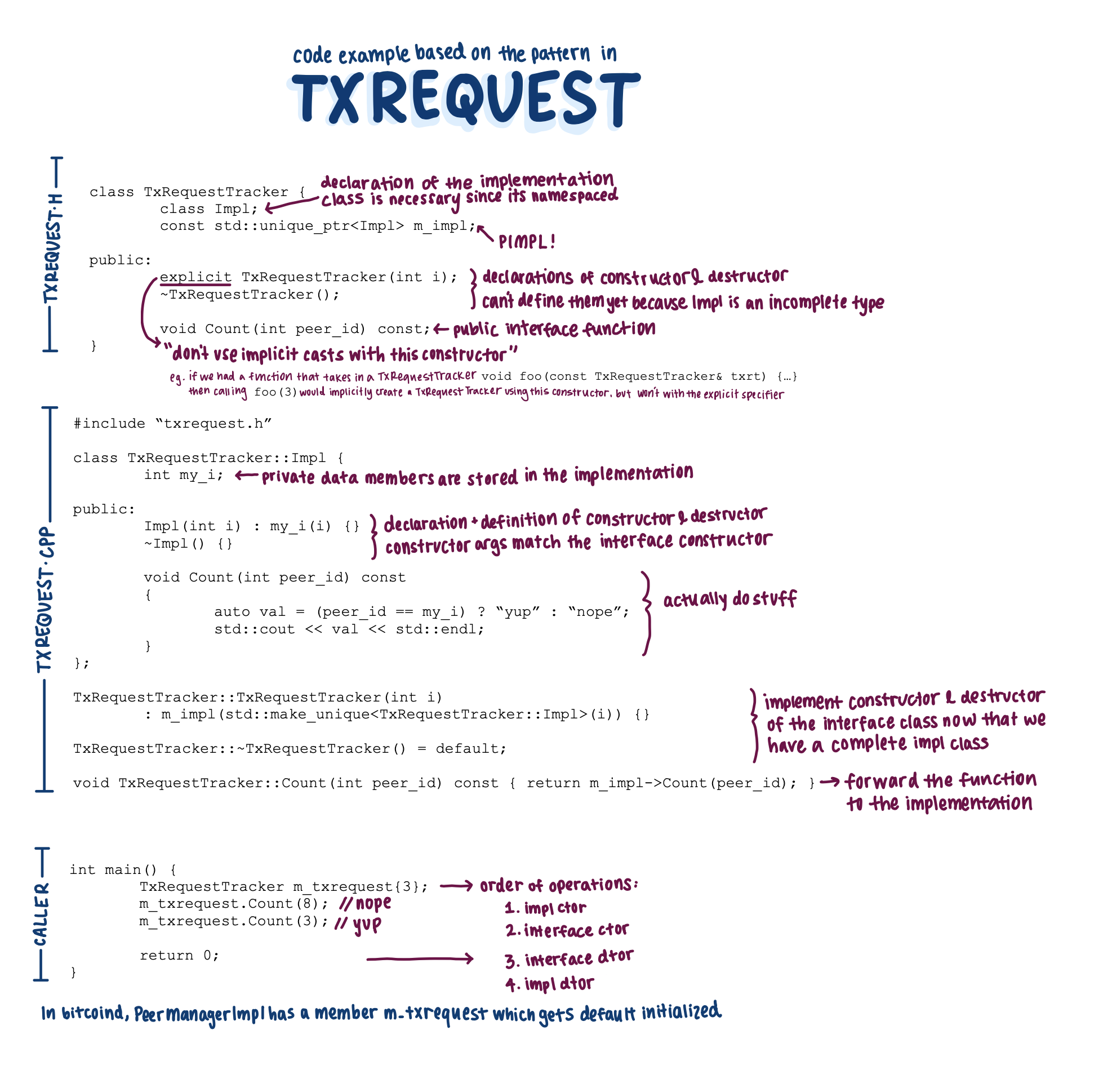

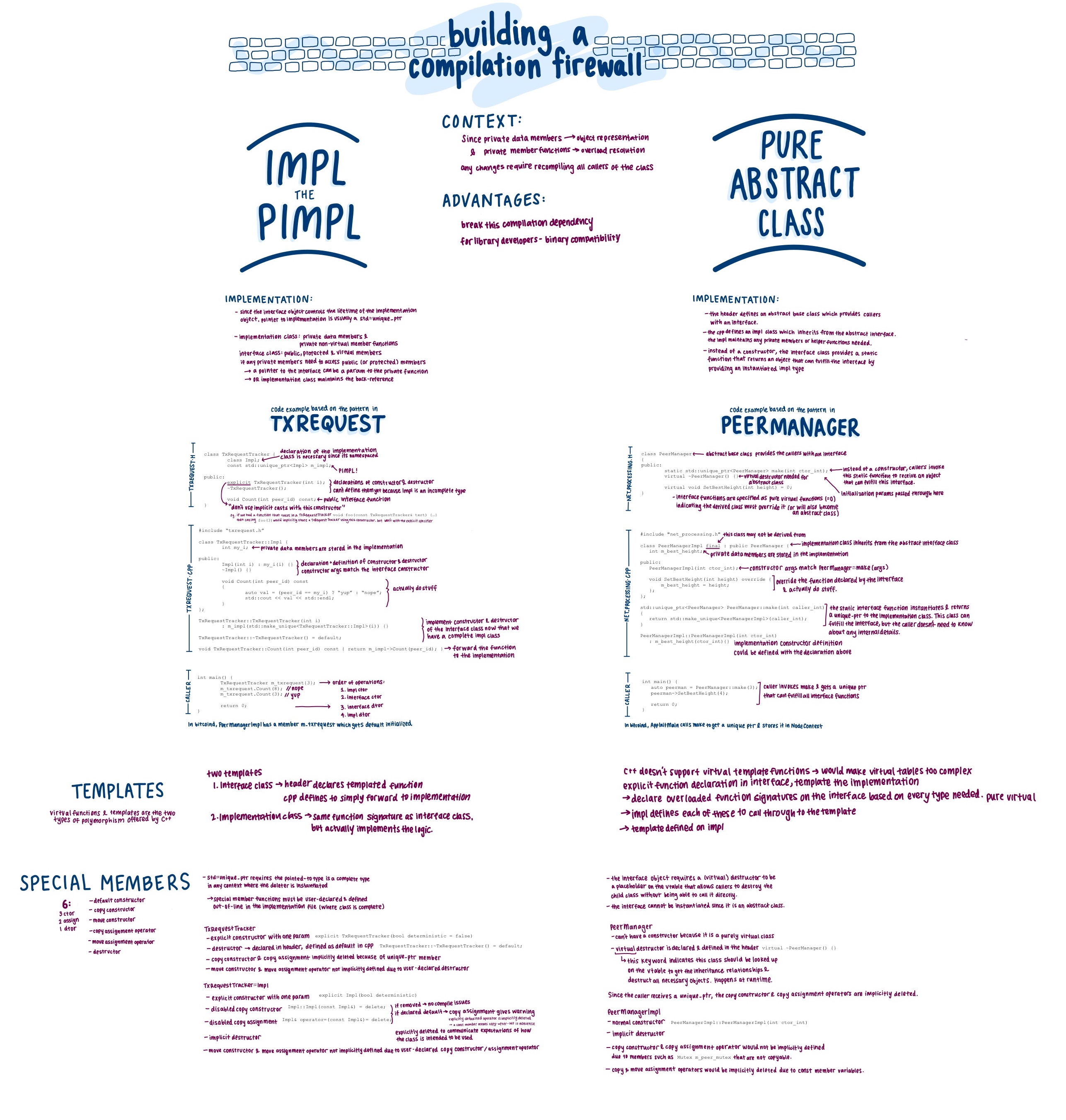

Implementation separation

Many of the classes found throughout the codebase use the PIMPL technique to separate their implementation from the external representation. See PIMPL technique in the Appendix for more information.

Consensus & validation

| This section has been updated to Bitcoin Core @ v23.0 |

One of the fundamental concepts underlying bitcoin is that nodes on the network are able to maintain decentralized consensus on the ordering of transactions in the system.

The primary mechanism at work is that all nodes validate every block, and every transaction contained within that block, against their own copy of the consensus rules. The secondary mechanism is that in the event of a discrepancy between two competing chain tips nodes should follow the chain with the most cumulative proof-of-work. The result is that all honest nodes in the network will eventually converge onto a single, canonical, valid chain.

| If all nodes do not compute consensus values identically (including edge cases) a chainsplit will result. |

For more information on how the bitcoin networks' decentralized consensus mechanism works see the Mastering Bitcoin section on decentralized consensus.

| In Bitcoin Core there are an extra level of validation checks applied to incoming transactions in addition to consensus checks called "policy" which have a slightly different purpose, see Consensus vs Policy for more information on the differences between the two. |

- Consensus

-

A collection of functions and variables which must be computed identically to all other nodes on the network in order to remain in consensus and therefore on the main chain.

- Validation

-

Validation of blocks, transactions and scripts, with a view to permitting them to be added to either the blockchain (must pass consensus checks) or our local mempool (must pass policy checks).

Consensus in Bitcoin Core

Naturally one might assume that all code related to consensus could be found in the src/consensus/ directory, however this is not entirely the case. Components of consensus-related code can be found across the Bitcoin Core codebase in a number of files, including but not limited to:

📂 bitcoin

📂 src

📂 consensus

📂 script

📄interpreter.cpp

📄 validation.h

📄 validation.cppConsensus-critical functions can also be found in proximity to code which could affect whether a node considers a transaction or block valid. This could extend to, for example, block storage database code.

An abbreviated list of some of the more notable consensus functions and variables is shown below.

| File | Objects |

|---|---|

src/consensus/amount.h |

|

src/consensus/consensus.h |

|

src/consensus/merkle.{h|cpp} |

|

src/consensus/params.h |

|

src/consensus/tx_check.{h|cpp} |

|

src/consensus/tx_verify.{h|cpp} |

|

src/consensus/validation.h |

|

Consensus model

The consensus model in the codebase can be thought of as a database of the current state of the blockchain. When a new block is learned about it is processed and the consensus code must determine which block is the current best. Consensus can be thought of as a function of available information — it’s output is simply a deterministic function of its input.

There are a simple set of rules for determining the best block:

-

Only consider valid blocks

-

Where multiple chains exist choose the one with the most cumulative Proof of Work (PoW)

-

If there is a tie-breaker (same height and work), then use first-seen

The result of these rules is a tree-like structure from genesis to the current day, building on only valid blocks.

Whilst this is easy-enough to reason about in theory, the implementation doesn’t work exactly like that. It must consider state, do I go forward or backwards for example.

Validation in Bitcoin Core

Originally consensus and validation were much of the same thing, in the same source file. However splitting of the code into strongly delineated sections was never fully completed, so validation.* files still hold some consensus codepaths.

Consensus vs Policy

What is the difference between consensus and policy checks? Both seem to be related to validating transactions. We can learn a lot about the answer to this question from sdaftuar’s StackExchange answer.

The answer teaches us that policy checks are a superset of validation checks — that is to say that a transaction that passes policy checks has implicitly passed consensus checks too.

Nodes perform policy-level checks on all transactions they learn about before adding them to their local mempool.

Many of the policy checks contained in policy are called from inside validation, in the context of adding a new transaction to the mempool.

Consensus and validation bugs

Consensus and validation bugs can arise both from inside the Bitcoin Core codebase itself, and from external dependencies. Bitcoin wiki lists some CVE and other Exposures.

OpenSSL consensus failure

Pieter Wuille disclosed the possibility of a consensus failure via usage of OpenSSL. The issue was that the OpenSSL signature verification was accepting multiple signature serialization formats (for the same signature) as valid. This effectively meant that a transactions' ID (txid) could be changed, because the signature contributes to the txid hash.

Click to show the code comments related to pubkey signature parsing from pubkey.cpp

/** This function is taken from the libsecp256k1 distribution and implements

* DER parsing for ECDSA signatures, while supporting an arbitrary subset of

* format violations.

*

* Supported violations include negative integers, excessive padding, garbage

* at the end, and overly long length descriptors. This is safe to use in

* Bitcoin because since the activation of BIP66, signatures are verified to be

* strict DER before being passed to this module, and we know it supports all

* violations present in the blockchain before that point.

*/

int ecdsa_signature_parse_der_lax(const secp256k1_context* ctx, secp256k1_ecdsa_signature* sig, const unsigned char *input, size_t inputlen) {

// ...

}There were a few cases to consider:

-

signature length descriptor malleation (extension to 5 bytes)

-

third party malleation: signature may be slightly "tweaked" or padded

-

third party malleation: negating the

Svalue of the signature

In the length descriptor case there is a higher risk of causing a consensus-related chainsplit. The sender can create a normal-length valid signature, but which uses a 5 byte length descriptor meaning that it might not be accepted by OpenSSL on all platforms.

| Note that the sender can also "malleate" the signature whenever they like, by simply creating a new one, but this will be handled differently than a length-descriptor-extended signature. |

In the second case, signature tweaking or padding, there is a lesser risk of causing a consensus-related chainsplit. However the ability of third parties to tamper with valid transactions may open up off-chain attacks related to Bitcoin services or layers (e.g. Lightning) in the event that they are relying on txids to track transactions.

It is interesting to consider the order of the steps taken to fix this potential vulnerability:

-

First the default policy in Bitcoin Core was altered (via

isStandard()) to prevent the software from relaying or accepting into the mempool transactions with non-DER signature encodings.

This was carried out in PR#2520. -

Following the policy change, the strict encoding rules were later enforced by consensus in PR#5713.

We can see the resulting flag in the script verification enum:

// Passing a non-strict-DER signature or one with undefined hashtype to a checksig operation causes script failure.

// Evaluating a pubkey that is not (0x04 + 64 bytes) or (0x02 or 0x03 + 32 bytes) by checksig causes script failure.

// (not used or intended as a consensus rule).

SCRIPT_VERIFY_STRICTENC = (1U << 1),Expand to see where this flag is checked in src/script/interpreter.cpp

bool CheckSignatureEncoding(const std::vector<unsigned char> &vchSig, unsigned int flags, ScriptError* serror) {

// Empty signature. Not strictly DER encoded, but allowed to provide a

// compact way to provide an invalid signature for use with CHECK(MULTI)SIG

if (vchSig.size() == 0) {

return true;

}

if ((flags & (SCRIPT_VERIFY_DERSIG | SCRIPT_VERIFY_LOW_S | SCRIPT_VERIFY_STRICTENC)) != 0 && !IsValidSignatureEncoding(vchSig)) {

return set_error(serror, SCRIPT_ERR_SIG_DER);

} else if ((flags & SCRIPT_VERIFY_LOW_S) != 0 && !IsLowDERSignature(vchSig, serror)) {

// serror is set

return false;

} else if ((flags & SCRIPT_VERIFY_STRICTENC) != 0 && !IsDefinedHashtypeSignature(vchSig)) {

return set_error(serror, SCRIPT_ERR_SIG_HASHTYPE);

}

return true;

}

bool static CheckPubKeyEncoding(const valtype &vchPubKey, unsigned int flags, const SigVersion &sigversion, ScriptError* serror) {

if ((flags & SCRIPT_VERIFY_STRICTENC) != 0 && !IsCompressedOrUncompressedPubKey(vchPubKey)) {

return set_error(serror, SCRIPT_ERR_PUBKEYTYPE);

}

// Only compressed keys are accepted in segwit

if ((flags & SCRIPT_VERIFY_WITNESS_PUBKEYTYPE) != 0 && sigversion == SigVersion::WITNESS_V0 && !IsCompressedPubKey(vchPubKey)) {

return set_error(serror, SCRIPT_ERR_WITNESS_PUBKEYTYPE);

}

return true;

}|

Do you think this approach — first altering policy, followed later by consensus — made sense for implementing the changes needed to fix this consensus vulnerability? Are there circumstances where it might not make sense? |

Database consensus

Historically Bitcoin Core used Berkeley DB (BDB) for transaction and block indices. In 2013 a migration to LevelDB for these indices was included with Bitcoin Core v0.8. What developers at the time could not foresee was that nodes that were still using BDB, all pre 0.8 nodes, were silently consensus-bound by a relatively obscure BDB-specific database lock counter.

| BDB required a configuration setting for the total number of locks available to the database. |

Bitcoin Core was interpreting a failure to grab the required number of locks as equivalent to block validation failing. This caused some BDB-using nodes to mark blocks created by LevelDB-using nodes as invalid and caused a consensus-level chain split. BIP 50 provides further explanation on this incident.

Although database code is not in close proximity to the /src/consensus region of the codebase it was still able to induce a consensus bug.

|

BDB has caused other potentially-dangerous behaviour in the past. Developer Greg Maxwell describes in a Q&A how even the same versions of BDB running on the same system exhibited non-deterministic behaviour which might have been able to initiate chain re-orgs.

An inflation bug

This Bitcoin Core disclosure details a potential inflation bug.

It originated from trying to speed up transaction validation in main.cpp#CheckTransaction() which is now consensus/tx_check.cpp#CheckTransaction(), something which would in theory help speed up IBD (and less noticeably singular/block transaction validation).

The result in Bitcoin Core versions 0.15.x → 0.16.2 was that a coin that was created in a previous block, could be spent twice in the same block by a miner, without the block being rejected by other Bitcoin Core nodes (of the aforementioned versions).

Whilst this bug originates from validation, it can certainly be described as a breach of consensus parameters.

In addition, nodes of version 0.14.x ⇐ node_version >= 0.16.3 would reject inflation blocks, ultimately resulting in a chain split provided that miners existed using both inflation-resistant and inflation-permitting clients.

Hard & Soft Forks

Before continuing with this section, ensure that you have a good understanding of what soft and hard forks are, and how they differ. Some good resources to read up on this further are found in the table below.

| Title | Resource | Link |

|---|---|---|

What is a soft fork, what is a hard fork, what are their differences? |

StackExchange |

|

Soft forks |

bitcoin.it/wiki |

|

Hard forks |

bitcoin.it/wiki |

|

Soft fork activation |

Bitcoin Optech |

|

List of consensus forks |

BitMex research |

|

A taxonomy of forks (BIP99) |

BIP |

|

Modern Soft Fork Activation |

bitcoin-dev mailing list |

|

Chain splits and Resolutions |

BitcoinMagazine guest |

When making changes to Bitcoin Core its important to consider whether they could have any impact on the consensus rules, or the interpretation of those rules. If they do, then the changes will end up being either a soft or hard fork, depending on the nature of the rule change.

| As described, certain Bitcoin Core components, such as the block database can also unwittingly introduce forking behaviour, even though they do not directly modify consensus rules. |

Some of the components which are known to alter consensus behaviour, and should therefore be approached with caution, are listed in the section consensus components.

Changes are not made to consensus values or computations without extreme levels of review and necessity. In contrast, changes such as refactoring can be (and are) made to areas of consensus code, when we can be sure that they will not alter consensus validation.

Making forking changes

There is some debate around whether it’s preferable to make changes via soft or hard fork. Each technique has advantages and disadvantages.

| Type | Advantages | Disadvantages |

|---|---|---|

Soft fork |

|

|

Hard fork |

|

|

Upgrading consensus rules with soft forks

When soft-forking in new bitcoin consensus rules it is important to consider how old nodes will interpret the new rules. For this reason the preferred method historically was to make something (e.g. an unused OPCODE which was to be repurposed) "non-standard" prior to the upgrade. Making the opcode non-standard has the effect that transaction scripts using it will not be relayed by nodes using this policy. Once the soft fork is activated policy is amended to make relaying transactions using this opcode standard policy again, so long as they comply with the ruleset of the soft fork.

Using the analogy above, we could think of OP_NOP opcodes as unsculpted areas of marble.

| Currently OP_NOP1 and OP_NOP4-NOP_NOP10 remain available for this. |

Once the opcode has been made non-standard we can then sculpt the new rule from the marble and later re-standardize transactions using the opcode so long as they follow the new rule.

This makes sense from the perspective of an old, un-upgraded node who we are trying to remain in consensus with. From their perspective they see an OP_NOP performing (like the name implies) nothing, but not marking the transaction as invalid. After the soft fork they will still see the (repurposed) OP_NOP apparently doing nothing but also not failing the transaction.

However from the perspective of the upgraded node they now have two possible evaluation paths for the OP_NOP: 1) Do nothing (for the success case) and 2) Fail evaluation (for the failure case). This is summarized in the table below.

| Before soft fork | After soft fork | |

|---|---|---|

Legacy node |

1) Nothing |

1) Nothing |

Upgraded Node |

1) Nothing |

1) Nothing (soft forked rule evaluation success) |

You may notice here that there is still room for discrepancy; a miner who is not upgraded could possibly include transactions in a block which were valid according to legacy nodes, but invalid according to upgraded nodes. If this miner had any significant hashpower this would be enough to initiate a chainsplit, as upgraded miners would not follow this tip.

Repurposing OP_NOPs does have its limitations. First and foremost they cannot manipulate the stack, as this is something that un-upgraded nodes would not expect or validate identically. Getting rid of the OP_DROP requirement when using repurposed OP_NOPs would require a hard fork.

Examples of soft forks which re-purposed OP_NOPs include CLTV and CSV.

Ideally these operations would remove the subsequent object from the stack when they had finished processing it, so you will often see them followed by OP_DROP which removes the object, for example in the script used for the to_local output in a lightning commitment transaction:

OP_IF

# Penalty transaction

<revocationpubkey>

OP_ELSE

`to_self_delay`

OP_CHECKSEQUENCEVERIFY

OP_DROP

<local_delayedpubkey>

OP_ENDIF

OP_CHECKSIGThere are other limitations associated with repurposing OP_NOPs, and ideally bitcoin needed a better upgrade system…

SegWit upgrade

SegWit was the first attempt to go beyond simply repurposing OP_NOPs for upgrades.

The idea was that the scriptPubKey/redeemScript would consist of a 1 byte push opcode (0-16) followed by a data push between 2 and 40 bytes.

The value of the first push would represent the version number, and the second push the witness program.

If the conditions to interpret this as a SegWit script were matched, then this would be followed by a witness, whose data varied on whether this was a P2WPKH or P2WSH witness program.

Legacy nodes, who would not have the witness data, would interpret this output as anyonecanspend and so would be happy to validate it, whereas upgraded nodes could validate it using the additional witness against the new rules.

To revert to the statue analogy this gave us the ability to work with a new area of the marble which was entirely untouched.

The addition of a versioning scheme to SegWit was a relatively late addition which stemmed from noticing that, due to the CLEANSTACK policy rule which required exactly 1 true element to remain on the stack after execution, SegWit outputs would be of the form OP_N + DATA.

With SegWit we wanted a compact way of creating a new output which didn’t have any consensus rules associated with it, yet had lots of freedom, was ideally already non-standard, and was permitted by CLEANSTACK.

The solution was to use two pushes: according to old nodes there are two elements, which was non-standard.

The first push must be at least one byte, so we can use one of the OP_N opcodes, which we then interpret as the SegWit version.

The second is the data we have to push.

Whilst this immediately gave us new upgrade paths via SegWit versions Taproot (SegWit version 1) went a step further and declared new opcodes inside of SegWit, also evaluated as anyonecanspend by nodes that don’t support SegWit, giving us yet more soft fork upgradability.

These opcodes could in theory be used for anything, for example if there was ever a need to have a new consensus rule on 64 bit numbers we could use one of these opcodes.

Fork wish lists

There are a number of items that developers have had on their wish lists to tidy up in future fork events.

An email from Matt Corallo with the subject "The Great Consensus Cleanup" described a "wish list" of items developers were keen to tidy up in a future soft fork.

The Hard Fork Wishlist is described on this en.bitcoin.it/wiki page. The rationale for collecting these changes together, is that if backwards-incompatible (hard forking) changes are being made, then we "might as well" try and get a few in at once, as these events are so rare.

Bitcoin core consensus specification

A common question is where the bitcoin protocol is documented, i.e. specified. However bitcoin does not have a formal specification, even though many ideas have some specification (in BIPs) to aid re-implementation.

| The requirements to be compliant with "the bitcoin spec" are to be bug-for-bug compatible with the Bitcoin Core implementation. |

The reasons for Bitcoin not having a codified specification are historical; Satoshi never released one. Instead, in true "Cypherpunks write code" style and after releasing a general whitepaper, they simply released the first client. This client existed on it’s own for the best part of two years before others sought to re-implement the rule-set in other clients:

A forum post from Satoshi in June 2010 had however previously discouraged alternative implementations with the rationale:

…

I don’t believe a second, compatible implementation of Bitcoin will ever be a good idea. So much of the design depends on all nodes getting exactly identical results in lockstep that a second implementation would be a menace to the network. The MIT license is compatible with all other licenses and commercial uses, so there is no need to rewrite it from a licensing standpoint.

It is still a point of contention amongst some developers in the community, however the fact remains that if you wish to remain in consensus with the majority of (Bitcoin Core) nodes on the network, you must be exactly bug-for-bug compatible with Bitcoin Core’s consensus code.

| If Satoshi had launched Bitcoin by providing a specification, could it have ever been specified well-enough to enable us to have multiple node implementations? |

|